Transformers play a growing role in computer vision, where they help unify applications and build better models. They were originally built for NLP tasks, and CNNs still dominate most vision workloads. However, developers are increasingly turning to transformers to reduce architectural complexity, explore scalability, and train models efficiently.

Transformers achieve state-of-the-art results on NLP tasks. At the same time, computer vision and NLP are increasingly being combined to build more efficient architectures. As a result, the use of transformers in computer vision is growing.

Hence, this article explains transformers’ role and use cases in computer vision.

Understanding the Role and Emergence of Transformers in Computer Vision

What are Transformers?

Transformers are a neural network architecture designed for sequence-to-sequence tasks. They handle long-range dependencies with relative simplicity, and they compute representations of their inputs and outputs without using sequence-aligned RNNs or convolutions.

Transformers were introduced in the paper “Attention Is All You Need”, which states, “The Transformer is the first transduction model relying entirely on self-attention to compute representations of its input and output without using sequence-aligned RNNs or convolution.”

Transformers are deep learning models built around the attention mechanism, which weighs the significance of each part of the input data separately. A transformer is composed of multiple self-attention layers. This design makes transformers useful across AI fields such as NLP and Computer Vision.
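To make the idea of "weighing each part of the input" concrete, here is a minimal NumPy sketch of scaled dot-product self-attention. The function names, the random projection matrices, and the toy sizes are assumptions chosen for illustration; a trained model would learn these weights.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over a sequence x of shape (n, d)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])   # (n, n) pairwise relevance
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ v, weights

# Toy input: 4 tokens with 8 features each (hypothetical sizes).
rng = np.random.default_rng(0)
n_tokens, d_model = 4, 8
x = rng.normal(size=(n_tokens, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

out, weights = self_attention(x, w_q, w_k, w_v)
print(out.shape, weights.shape)
```

Each row of `weights` describes how much one token attends to every other token, which is the "significance" the paragraph above refers to.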

The Transformer Model:

The transformer model was initially built to solve NLP tasks such as language translation. It follows an encoder-decoder format, and both the encoder and the decoder include self-attention layers, linear layers, and residual connections. The self-attention layers aggregate information across tokens while the linear layers transform each token, so the model performs aggregation and transformation separately.

- Encoder: In language translation, for instance, the encoder lets the input tokens interact with one another and creates a representation for each token. In doing so, it captures semantic similarities among the tokens.

- Decoder: The decoder generates the output one word at a time, based on the encoder outputs and the words it has already produced. At each step it attends to the relevant parts of the encoder outputs. During training, masking ensures that the decoder sees only the previous outputs and not the outputs that come later in the sequence.
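The restriction that the decoder looks only at previous outputs is usually implemented with a causal mask on the attention scores. The sketch below assumes NumPy and uses uniform scores purely for illustration; the helper names are hypothetical.

```python
import numpy as np

def causal_mask(n):
    # True where position i may attend to position j (j <= i).
    return np.tril(np.ones((n, n), dtype=bool))

def masked_softmax(scores, mask):
    # Disallowed positions get -inf so their weight becomes exactly 0.
    scores = np.where(mask, scores, -np.inf)
    e = np.exp(scores - scores.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

scores = np.zeros((4, 4))   # uniform scores, for illustration only
weights = masked_softmax(scores, causal_mask(4))
print(weights)
```

With uniform scores, the first position attends only to itself, while the last position spreads its attention evenly over all four positions. No position ever receives weight from a later one.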

What is Computer Vision?

Computer Vision is a branch of Artificial Intelligence that helps computers and systems extract information from digital images, videos, and other visual inputs. It also helps them take actions and make recommendations based on that information. If AI is the brain that processes information, computer vision is the eyes that see, monitor, and comprehend visuals.

Computer Vision builds most of its algorithms for classifying objects in visual inputs on CNNs. CNNs, or convolutional neural networks, analyze data within local receptive fields. They process an image as a matrix of pixel values. These values are organized in 3D tensors (height, width, and channels), and stacks of learned filters act as detectors that respond to features in each section of the image.
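As a rough illustration of how a convolutional filter scans a matrix of values, the following NumPy sketch applies a single hand-written filter to a small single-channel image. The `conv2d` helper and the filter values are assumptions for this example; real CNN layers learn their filters and operate on multi-channel tensors.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            # Each output value depends only on a local kh x kw window.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

image = np.arange(25, dtype=float).reshape(5, 5)   # toy 5x5 "image"
edge = np.array([[-1.0, 0.0, 1.0]] * 3)            # simple horizontal-gradient filter
feature_map = conv2d(image, edge)
print(feature_map.shape)
```

Because each output value sees only a 3x3 window, a single layer captures local structure. Wider context requires stacking layers.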

Computer Vision trains machines and devices to perform visual functions. To be useful, it needs to produce results quickly using cameras, data, and algorithms. A trained system can therefore process large sets of images and videos faster than a human could.

Common tasks that Computer Vision Systems Execute:

- Classifying Objects: CV systems analyze visual content to assign the object in an image or video to a specific category.

- Identifying Objects: CV systems analyze visual content and identify particular objects in a video or image.

- Tracking Objects: CV systems process videos, detect objects that meet certain criteria, and monitor their movements.

Why replace CNNs with Transformers in Computer Vision?

Although transformers were initially designed for NLP, researchers have a keen interest in applying them to CV as an alternative to MLPs, CNNs, and RNNs. Transformers in Computer Vision make it possible to process various modalities with similar processing blocks. They also allow modeling long-range dependencies among input sequence elements and support parallel processing.

Transformers also make efficient use of larger datasets and can handle complex tasks. They rely on the attention mechanism from the 2017 paper “Attention Is All You Need”, which lets transformer architectures evaluate inputs in parallel. In vision, attention emphasizes the relevant components of the visual input and fades out the rest. With attention mechanisms, transformers can therefore help build meaningful captions and segments for visual inputs.

Why is there a requirement for attention when CNNs are capable of feature extraction?

Although CNNs are popular for vision tasks and offer efficiency and scalability, they have limits as models grow more complex. The receptive field of a CNN depends on the size of its filters and the number of convolutional layers. Enlarging it means increasing these hyperparameters, which has a significant impact on the model and can lead to vanishing gradients or even make the model impossible to train. CNNs therefore face tradeoffs between receptive field size and trainability when they need to capture broad context. Transformers are reported to outperform CNNs in computational efficiency and accuracy, by a factor often quoted as almost four.
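The dependence of the receptive field on filter size and depth can be computed with a standard recurrence: each layer widens the field by (kernel size - 1) times the product of all earlier strides. The helper below is a small sketch of that calculation, and the layer configurations are hypothetical examples.

```python
def receptive_field(layers):
    """Receptive field of stacked conv layers.

    layers: list of (kernel_size, stride) tuples, ordered from input to output.
    """
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump   # growth scaled by the accumulated stride
        jump *= stride
    return rf

three_convs = receptive_field([(3, 1)] * 3)                  # three 3x3 convs, stride 1
with_stride = receptive_field([(3, 1), (3, 2), (3, 1)])      # middle layer has stride 2
print(three_convs, with_stride)
```

Three stacked 3x3 layers see only a 7x7 region of the input, so covering a large image takes many layers or large strides. A self-attention layer, by contrast, lets every position attend to every other position in a single step.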

Transformers in Computer Vision: The rise of Vision Transformers (ViT)

Vision Transformers are models that replace the convolutions in computer vision with transformers. They are self-attention-centric transformer architectures that process images independently of CNNs. A pure transformer architecture performs classification on a sequence of image patches, using self-attention layers to embed information from across the whole image.

The term was coined in the research paper “An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale”, presented at ICLR 2021. The paper was written and published by authors from the Google Research Brain Team.

During training, ViT learns to encode the relative position and relevance of image patches so that it can reconstruct the structure of the image. Here are the layers of a ViT encoder:

- Multi-Head Self-Attention Layer (MSA, sometimes written MSP): This layer concatenates the outputs of all attention heads and projects them linearly to the right dimensions. The multiple heads help the model learn both local and global dependencies in an image.

- Multi-Layer Perceptron Layer (MLP): This layer consists of two linear layers with a Gaussian Error Linear Unit (GELU) activation. It is a feed-forward neural network with three components, namely an input layer, a hidden layer, and an output layer. MLPs in general are used for tasks like image identification, spam detection, and pattern recognition.

- Layer Norm (LN): A layer norm is added before every block. Because it does not introduce new dependencies between the training images, it helps reduce training time and improve overall performance.

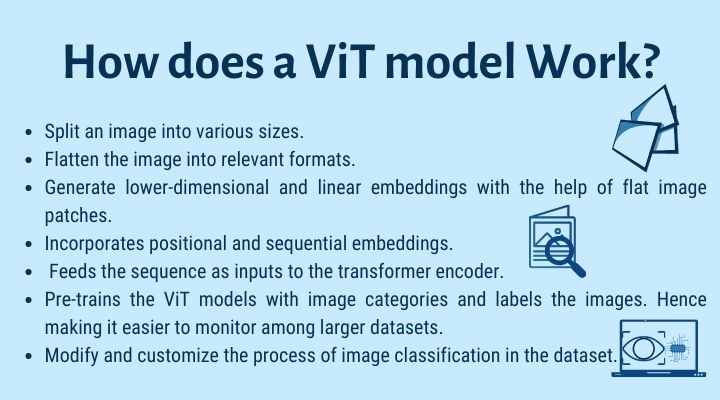
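The encoder layers described above can be combined into a single block. The NumPy sketch below is an illustration under several assumptions: random weights, toy dimensions, a layer norm without learned scale and shift, the tanh approximation of GELU, and residual connections around both sub-layers. It is not the reference implementation from the ViT paper.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    # Normalize each token vector (learned scale/shift omitted for brevity).
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def gelu(x):
    # tanh approximation of the Gaussian Error Linear Unit.
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_self_attention(x, w_qkv, w_out, n_heads):
    n, d = x.shape
    d_head = d // n_heads
    q, k, v = np.split(x @ w_qkv, 3, axis=-1)               # each (n, d)

    def split_heads(t):
        return t.reshape(n, n_heads, d_head).transpose(1, 0, 2)  # (heads, n, d_head)

    q, k, v = split_heads(q), split_heads(k), split_heads(v)
    weights = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d_head))  # (heads, n, n)
    heads = weights @ v                                      # (heads, n, d_head)
    concat = heads.transpose(1, 0, 2).reshape(n, d)          # concatenate the heads
    return concat @ w_out                                    # final linear projection

def encoder_block(x, p, n_heads):
    # Layer norm before each sub-layer, residual connection after it.
    x = x + multi_head_self_attention(layer_norm(x), p["w_qkv"], p["w_out"], n_heads)
    x = x + gelu(layer_norm(x) @ p["w1"]) @ p["w2"]          # two-layer MLP with GELU
    return x

rng = np.random.default_rng(0)
n_tokens, d_model, d_hidden, n_heads = 5, 16, 64, 4          # hypothetical sizes
params = {
    "w_qkv": rng.normal(scale=0.1, size=(d_model, 3 * d_model)),
    "w_out": rng.normal(scale=0.1, size=(d_model, d_model)),
    "w1": rng.normal(scale=0.1, size=(d_model, d_hidden)),
    "w2": rng.normal(scale=0.1, size=(d_hidden, d_model)),
}
x = rng.normal(size=(n_tokens, d_model))
y = encoder_block(x, params, n_heads)
print(y.shape)
```

The block keeps the token sequence at the same shape, which is what allows several such blocks to be stacked one after another.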

How does a ViT model Work?

Here are the steps in a Vision Transformer model:

- Firstly, it splits an image into fixed-size patches.

- Further, it flattens the image patches.

- Moreover, it generates lower-dimensional linear embeddings from the flattened image patches.

- It also adds positional embeddings so that the order of the patches is preserved.

- It then feeds the resulting sequence as input to the transformer encoder.

- Above all, it pre-trains the ViT model with image labels, fully supervised, on a large dataset.

- Lastly, it fine-tunes the model on the downstream dataset for image classification.
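The first few steps above (splitting, flattening, embedding, and adding positions) can be sketched in NumPy as follows. The image size, patch size, and embedding width are hypothetical, the weights are random rather than learned, and the extra class token prepended to the sequence follows the ViT paper rather than the list above.

```python
import numpy as np

def patchify(image, patch):
    """Split an (H, W, C) image into flattened, non-overlapping patches."""
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    x = image.reshape(h // patch, patch, w // patch, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)              # (rows, cols, patch, patch, c)
    return x.reshape(-1, patch * patch * c)     # one flat vector per patch

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))                 # toy RGB image
patch, d_model = 16, 64                         # hypothetical sizes

patches = patchify(image, patch)                # steps 1-2: split and flatten
w_embed = rng.normal(scale=0.02, size=(patches.shape[1], d_model))
embedded = patches @ w_embed                    # step 3: linear embedding

cls_token = np.zeros((1, d_model))              # assumed class token
pos_embed = rng.normal(scale=0.02, size=(patches.shape[0] + 1, d_model))
tokens = np.concatenate([cls_token, embedded], axis=0) + pos_embed  # step 4

print(patches.shape, tokens.shape)              # tokens go to the encoder (step 5)
```

A 32x32 image with 16x16 patches yields four patches, so the encoder receives a sequence of five tokens including the class token. Pre-training and fine-tuning then operate on this token sequence.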

Use Cases and Applications of Vision Transformers

Vision Transformers are widely used for image recognition tasks like object identification, segmentation, image categorization, and action identification. They also support generative modeling and multi-modal tasks like grounding, question-answering, and reasoning.

They also enable video processing tasks such as video forecasting and activity detection. Image enhancement, colorization, and image super-resolution often use ViT as well.

ViT also helps with 3D analysis, such as segmentation and classification. ViT models show that transformers in computer vision can work with fewer image-specific inductive biases than CNNs. They can also interpret images and capture both local and global features. As a result, ViT models offer high precision and can process larger sets of images while reducing processing time.

Conclusion:

In conclusion, transformers in Computer Vision improve image processing by regularizing models and augmenting data. They also reduce architectural complexity, support scalability, and train models efficiently.
